A third of children have false social media age of 18+

Yonder Consulting found that the majority of children aged between 8 and 17 (77%) who use social media now have their own profile on at least one of the large platforms. And despite most platforms having a minimum age of 13, the research suggests that 6 in 10 (60%) children aged 8 to 12 who use these platforms are signed up with their own profile.

Among this underage group (8 to 12s), up to half had set up at least one of their profiles themselves, while up to two-thirds had help from a parent or guardian.

Why does a child's online age matter?

When a child self-declares a false age to gain access to social media or online games, their claimed user age increases as they get older. This means they could be placed at greater risk of encountering age-inappropriate or harmful content online. For example, once a user reaches age 16 or 18, some platforms introduce certain features and functionalities not available to younger users, such as direct messaging and the ability to see adult content.
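The arithmetic behind this can be shown with a minimal sketch in Python. The function name and the example ages are hypothetical and are not drawn from the research. The sketch also assumes, as a simplification, that a platform ages a profile by the number of years elapsed since sign-up.

```python
def user_age(actual_age_now: int, actual_age_at_signup: int, declared_age_at_signup: int) -> int:
    """Return the age a platform would attribute to the profile today.

    Assumes the platform adds the years elapsed since sign-up to the
    age declared at sign-up (a simplification for illustration only).
    """
    years_elapsed = actual_age_now - actual_age_at_signup
    return declared_age_at_signup + years_elapsed


# Hypothetical example: a child signs up at age 8, declaring an age of 13.
for actual_age in (8, 11, 13):
    print(actual_age, user_age(actual_age, 8, 13))
```

In this illustrative case, the profile would appear to belong to a 16-year-old when the child is 11, and to an 18-year-old when the child is 13.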

Yonder’s study sought to estimate the proportion of children that have social media profiles with ‘user ages’ that make them appear older than they actually are. The findings suggest that almost half (47%) of children aged 8 to 15 with a social media profile have a user age of 16+, while 32% of children aged 8 to 17 have a user age of 18+.

Among the younger, 8 to 12s age group, the study estimated that two in five (39%) have a user age profile of a 16+ year old, while just under a quarter (23%) have a user age of 18+.

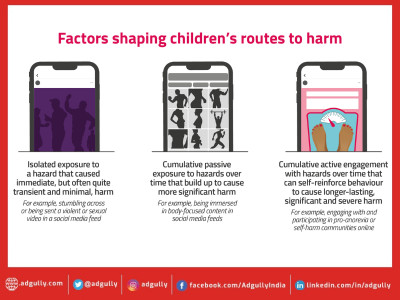

Risk factors that can lead children to harm online

In line with our duty to promote and research media literacy, and as set out in our roadmap to online safety regulation, we are publishing a series of research reports designed to further build our evidence base as we prepare to implement the new online safety laws.[1] Given that the protection of children sits at the core of the regime, today’s wave of research explores children’s experiences of harm online, as well as children’s and parents’ attitudes towards certain online protections.

Commissioned by Ofcom and carried out by Revealing Reality, a second, broader study into the risk factors that may lead children to harm online (PDF, 826.8 KB) found that providing a false age was only one of many potential triggers.

A range of risk factors were identified which potentially made children more vulnerable to online harm, especially when these factors appear to coincide or frequently co-occur with the harm experienced. These included:

- a child’s pre-existing vulnerabilities such as any special educational needs or disabilities (SEND), existing mental health conditions and social isolation;

- offline circumstances such as bullying or peer pressure, and feelings such as low self-esteem or poor body image;

- design features of platforms which either encouraged and enabled children to build large networks of people, often people they didn’t know, or exposed them to content and connections they hadn’t proactively sought out; and

- exposure to personally relevant, targeted, or peer-produced content, and material that was appealing as it was perceived as a solution to a problem or insecurity.

The study indicated that the severity of any impact can vary between children. This ranged from minimal transient emotional upset (such as confusion or anger), temporary behaviour-change or deep emotional impact (such as physical aggression or short-term food restriction), to far-reaching, severe psychological and physical harm (such as social withdrawal or acts of self-harm).

Children's and parents' attitudes towards age assurance

A third research study published today – commissioned jointly by Ofcom and the Information Commissioner’s Office under our DRCF programme of work – delves deeper into children’s and parents’ attitudes towards age assurance.[2]

Age assurance is a broad term encompassing a range of techniques designed to prevent children from accessing adult, harmful, or otherwise inappropriate content, and to help platforms tailor content and features to provide an age-appropriate experience. It covers measures such as self-declaration (as discussed above), hard identifiers such as passports, and AI and biometric-based systems, among others.

The study finds that parents and children are broadly supportive of the principle of age assurance, but also identifies that some methods raise concerns about privacy, parental control, children’s autonomy and usability.

Parents told us that they were concerned with keeping their children safe online, but equally wanted them to learn how to manage risks independently through experience. Many also didn’t want their children to be left out of online activities that their peers are allowed to take part in, and others felt that their children’s level of maturity, rather than simply their numerical age, was a primary consideration in the freedom they had.

Parents felt that the effort required for an age assurance method should be proportionate to the perceived potential risks of the activity. Both parents and children leaned towards “hard identifiers”, such as passports, for traditionally age-restricted activities like gambling or accessing pornography.

Social media and gaming tended to be perceived as comparatively less risky. Children tended to prefer a ‘self-declaration’ method of age assurance for these platforms and services, due to the perceived ease of circumvention and desire to use them without restrictions.

Some parents felt that minimum age restrictions for social media and games were quite arbitrary and, as we highlight above, helped their child to gain access. When prompted with different age assurance methods for social media and games, parents often preferred “parental confirmation” as they considered it afforded them both control and flexibility.

The Online Safety Bill

The Online Safety Bill will require Ofcom to assess and publish findings about the risks to children of harmful content they may encounter online.

It will also require in-scope services that are likely to be accessed by children to assess the risks of harm to youngsters who use their service, and to put in place proportionate systems and processes to mitigate and manage these risks.

We already regulate UK-established video sharing platforms and will shortly publish our key findings from our first full year of regulation. This report will focus on the measures that platforms have in place to protect users, including children, from harmful material and set out our strategy for the year ahead.

Notes to editors

- Research methodologies:

- Yonder Consulting: Children’s online user ages quantitative research study (PDF, 847.2 KB). Fieldwork was completed with a sample of 1,039 social media users of six popular platforms, aged 8-17. Platforms covered in the study were: Facebook, Instagram, TikTok, Snapchat, Twitter and YouTube. Results for YouTube suggest some children may have been referring to YouTube Kids (for which younger children are allowed a profile) which will have influenced the average percentages.

- Revealing Reality: Research into risk factors that may lead children to harm online (PDF, 826.8 KB). The research was conducted among 42 children, aged 7 to 17, and their parents/carers. It included in-person ethnographic interviews and observation, digital diaries, screen recording and social media tracking, and further follow-up remote interviewing.

- Revealing Reality: Families’ attitudes towards age assurance (PDF, 1.3 MB). The research included in-depth interviews with 18 families, involving media diary tasks, and eight focus groups – four with parents of children of similar ages and four with children in age groups ranging from 13 to 17.

- Yonder Consulting hosted a ‘Serious Game’ pilot trial (PDF, 1.1 MB). Social media platforms publish advice on staying safe online, but there are concerns that children don’t engage with this information. We are today reporting the findings of a small-scale pilot trial which tested the impact of a “serious game” as an approach to helping children stay safer online. It suggests that gamifying information can lead to increased knowledge acquisition and might have a positive influence on online behaviour. The research was conducted among a sample of 600 13–17-year-olds.

The findings of these research studies should not be considered a reflection of any policy position that Ofcom may adopt when we take up our role as the online safety regulator, or of Ofcom’s views towards any online service.

- The age assurance methods explored during the research were:

- Self-declaration: the user states their age or date of birth

- Hard identifiers: the submission of official documentation, or a scan of such documentation, such as a credit card, passport, or driving licence

- Facial image analysis (age estimation): a facial image is analysed by an AI system that has been trained on a database of facial images of known ages

- Behavioural profiling and inference (age estimation): the analysis of a user’s service usage behaviours and interactions, which are typically automated, to estimate age

- Parent/guardian confirmation: a user’s age or age range is confirmed by another connected accountholder, for example a parent or guardian, using their account to confirm the ages of their children and their accounts
