Amid growing security concerns, social media majors take a stand to make platforms safer

Instagram, YouTube, Facebook and Twitter have taken measures to make their platforms safer for users and brands by introducing new policies, features and updates.

Facebook-owned Instagram has introduced two new safety features to curb the menace of cyber-bullying on the platform. At a grand event on July 8, Adam Mosseri, Head of Instagram, introduced the features, which aim to prevent bullying on the platform and empower the targets of bullying to stand up for themselves.

Updates from the head of Instagram @mosseri about our commitment to lead the fight against online bullying ❤️ https://t.co/Co2rgsuJvt pic.twitter.com/tlngJftizT

— Instagram (@instagram) July 8, 2019

The first feature uses artificial intelligence (AI) to screen comments and notify users if what they are about to post may be harmful or offensive. Users will see a message, ‘Are you sure you want to post this?’, and will have the option to remove or change the comment before anyone else is able to see it. The second feature, Restrict, allows people to control their Instagram experience without notifying someone who may be targeting them.

On this development, Nitin Bhatia, Social Media Expert, Founder NitinSpeaks.com, said, “This is a brilliant attempt from Instagram to take on cyber bullying. What wowed me was how they are going to use technology to cater to this issue. There is no doubt in my mind that this move from them will soon encourage other platforms to follow suit.”

Vishal Chinchankar, Chief Digital Officer, Madison India, also considered it a great policy and the need of the hour. “While I believe it already exists on Facebook and YouTube, this will be a major push to roll out such policies across all other social platforms. Bullying is a major issue in the Indian context in terms of religion, race, caste, sexual orientation, etc., especially on social media platforms, and such policies will only help create a safer environment,” he added.

Unsafe Platforms?

Today, these digital platforms are used by people across the world, who create vast amounts of user-generated data. While the exchange of information is seamless, the fear of a privacy breach is always on users' minds. User and brand safety has always been a prime concern for digital platforms. Some of the most common threats on these platforms include the posting of obscene pictures and videos, data theft, bullying, trolling, fake news and attacks on a religion, caste or creed. Unfortunately, over the last few years, people and brands in every part of the world have fallen victim to such harassment.

Here are some recent examples in which people and brands were attacked on different platforms:

Zaira Wasim, a Bollywood actor, decided to quit the industry and focus more on her religious practices. The announcement sparked outrage among the Twitterati, and the actor was mocked, harassed and questioned about her decision.

5 years ago I made a decision that changed my life and today I’m making another one that’ll change my life again and this time for the better Insha’Allah! :) https://t.co/ejgKdViGmD

— Zaira Wasim (@ZairaWasimmm) June 30, 2019

Actual reasons - #MeToo didnt work....Movies Mili nahi...Amir Khan ne aur chance diya nahi

— Suchi Das (@Suchi_Das05) June 30, 2019

This tweet directs u to her Instagram post which has 6 pages. Here's the summary.

What she wrote : I've realised that AIIIah is everything. I quit bollywood to dedicate myself to AIIIah.

What she meant : Bollywood me koi kaam nahi mil raha ab. AIIIah ka naam leke nikal leti hu.

— THE SKIN DOCTOR (@theskindoctor13) June 30, 2019

Recently, Ananya Pandey, another upcoming Bollywood actor, was bullied on Instagram by someone claiming to be her classmate. This person claimed that Pandey was faking her admission to an international college and went on to share details of her personal life, including her boyfriends, flings and education. In response, Pandey posted a reply to the bully.

https://www.instagram.com/p/BycalZjg_nJ/?utm_source=ig_web_copy_link

HUL’s Surf Excel faced flak for its #RangLaayeSang campaign. The ad showed a small girl giving a young Muslim boy a ride to the mosque amid Holi celebrations. A section of social media lauded the message of peace and harmony, but the brand faced a massive backlash from another section, with calls for a boycott. Some commenters alleged that the brand was promoting love jihad and showing Namaaz to be more important than Holi.

— Vishvajit Chavan (@Vish_kc) March 17, 2019

Devotees (Hindus) opposing the advt. should be washed properly with Surf Excel ! - Mehbooba Mufti

Why Mehbooba, wishing #Hindus 2 be washed properly, never feel that terrorists should be washed properly?#BoycottSurfExcel

To establish Hindu Rashtra,

join us @HinduJagrutiOrg pic.twitter.com/AVPF2iDIse

Over the years, Facebook has similarly seen cases of crimes against women, in which people created fake profiles and posted obscene images of women, causing them considerable distress. Women have regularly complained about it.

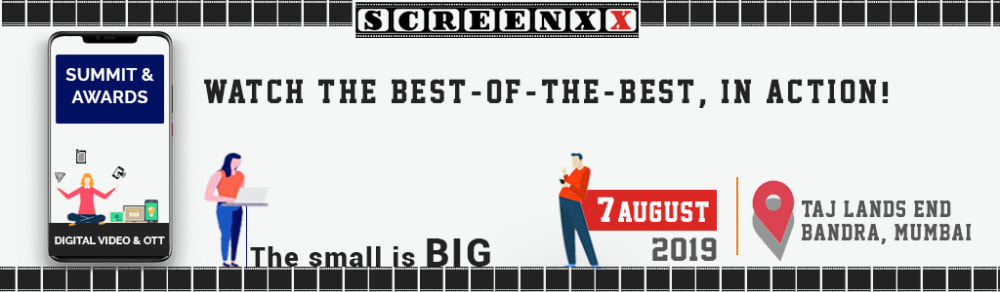

Recently, several big brands pulled their ads from YouTube after video blogger Matt Watson raised concerns that pedophiles were using the platform to trade information and draw attention to clips of young girls.

In 2017, YouTube faced a full-scale boycott over Google’s inability to ensure that ads would not appear next to hateful and offensive content. However, the platform recovered from it.

Manika Juneja, VP Operations - West & South, WATConsult, opined, “With the growing use of social media, platforms should introduce functionalities that can help in curbing irresponsible human actions when people convene online. The internet is desensitised to ‘trolling’, but the effects of it on a person’s well-being could be tremendous.”

Effective Measures

Digital platforms understand that if users do not find them safe, brands will not want to advertise on them. To keep the advertising dollars coming, these platforms are leaving no stone unturned to ensure the safety and privacy of users.

Facebook also recently updated its policy on pictures and videos, and it reserves the right to limit the visibility of photos and videos that contain graphic content in order to help people share responsibly. A photo or video containing graphic content may appear with a warning to let people know about the content before they view it, and may only be visible to people older than 18. The company is also working with governments and institutions to remove fake profiles, especially after the Cambridge Analytica debacle, in which valuable information about users was compromised.

YouTube has updated the platform to make it safer for minors and developed a policy against hate speech. The updates include rules around live streaming, comments and recommendations with regard to minors who use the platform. YouTube has shifted all kids' content to YouTube Kids.

WATConsult’s Juneja believes that Instagram’s new policy is more focused on personal profiles than on brand pages. “Brands, like humans, have the power to moderate content on their pages to protect their follower base. It is a healthy practice for the brands to highlight the page or community policy that users can refer to while engaging with a brand on social media. The new changes will work in their favor considering this will alarm users’ actions and conversations across any brand or personal pages.”

These digital platforms are using AI extensively because it can help identify user actions and thus include or exclude the audiences concerned. AI will help platforms take a step towards enforcing such policies in day-to-day operations. However, human intervention will still be required to guide AI through complex nuances.

Bhatia explained that AI could be used to solve a wide variety of complex issues plaguing social media. “We need to also accept that Instagram might not be the first one to introduce something like this for tackling social issues. We already have seen Facebook and Twitter attempting to use AI for tackling fake news. What’s encouraging is that AI is now catching the eye of developers for betterment of the society and that’s a good thing.”

Now that social media has become embedded in the fabric of our society, it is the users who need to become self-aware and watch their actions on social media.

Speaking on how people are tackling this issue, Juneja said, “Some people are opting in for micro-habits like social media detox, while others are restricting the time spent on a platform or indulging only on the right platforms for their wellbeing. These content moderation policies and features like Restrict will act as alarm and prevention tools.”

Chinchankar concluded by saying, “Change can happen only if the social platforms educate the consumer on such policies, for instance, the latest WhatsApp commercial, which depicted the platform as a place to spread happiness and not harmful messages.”
