Amazon trains massive AI model 'Olympus' to rival OpenAI, Alphabet

Amazon has formed a dedicated team to train a massive AI model called "Olympus," according to a Reuters report.

Amazon is investing heavily in this large language model (LLM) in the hope that it can compete with leading models from OpenAI and Alphabet, according to insiders.

The "Olympus" model is said to have 2 trillion parameters, which would make it one of the largest models under development. By comparison, OpenAI's GPT-4 is reported to have 1 trillion parameters.

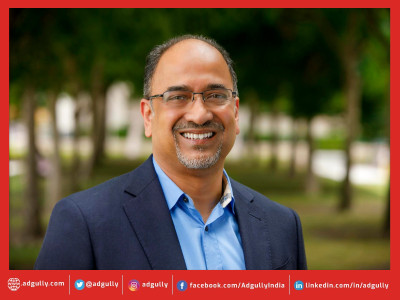

Rohit Prasad, the former head of Alexa, leads the team responsible for "Olympus" and now reports directly to CEO Andy Jassy. As head scientist for artificial general intelligence (AGI) at Amazon, Prasad has brought in researchers who previously worked on Alexa AI and in the Amazon science team to collaborate on model training, consolidating AI efforts across the company with dedicated resources.

Amazon has already trained smaller models, such as Titan, and has partnered with AI model startups such as Anthropic and AI21 Labs, making their models available to users of Amazon Web Services (AWS).

According to the insiders, there is no specific timeline for the release of the new model.

LLMs are the underlying technology for AI tools that learn from large datasets to generate human-like responses. Training larger AI models is costly because of the substantial computing power required. In an earnings call in April, Amazon executives said they intended to increase investment in LLMs and generative AI while cutting back on fulfillment and transportation spending in the retail business.
