Prevalence of hate speech, graphic content and nudity dropped in Q4 2020: Facebook

Facebook has published its Community Standards Enforcement Report for Q4 2020. The report shows how the company enforced its policies from October through December 2020, with metrics across 12 policies on Facebook and 10 on Instagram.

In Q4, hate speech prevalence on Facebook dropped from 0.10-0.11% to 0.07-0.08%, or 7 to 8 views of hate speech for every 10,000 views of content. The prevalence of violent and graphic content dropped from 0.07% to 0.05%, and that of adult nudity dropped from 0.05-0.06% to 0.03-0.04%.

Facebook attributes these improvements in prevalence mainly to changes it made to reduce problematic content in News Feed.

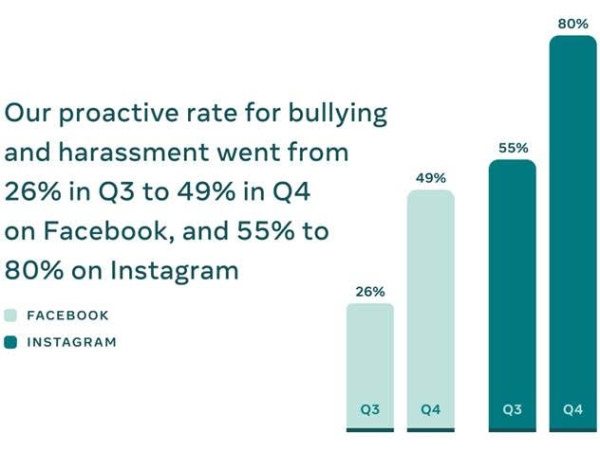

Facebook’s proactive rate, the percentage of actioned content that the company found before a user reported it, improved in several problem areas, most notably bullying and harassment. The proactive rate for bullying and harassment rose from 26% in Q3 to 49% in Q4 on Facebook, and from 55% to 80% on Instagram. The company credits improvements to its AI in areas where nuance and context are essential, such as hate speech and bullying and harassment.

Guy Rosen, Facebook’s VP of Integrity, noted: “We’re slowly continuing to regain our content review workforce globally, though we anticipate our ability to review content will be impacted by COVID-19 until a vaccine is widely available. With limited capacity, we prioritize the most harmful content for our teams to review, such as suicide and self-injury content.”

In Q4, Facebook took action on:

- 6.3 million pieces of bullying and harassment content, up from 3.5 million in Q3, due in part to updates in its technology for detecting comments

- 6.4 million pieces of organized hate content, up from 4 million in Q3

- 26.9 million pieces of hate speech content, up from 22.1 million in Q3, due in part to updates in its technology for Arabic, Spanish and Portuguese

- 2.5 million pieces of suicide and self-injury content, up from 1.3 million in Q3, due to increased reviewer capacity

In Q4, Instagram took action on:

- 5 million pieces of bullying and harassment content, up from 2.6 million in Q3, due in part to updates in its technology for detecting comments

- 308,000 pieces of organized hate content, up from 224,000 in Q3

- 6.6 million pieces of hate speech content, up from 6.5 million in Q3

- 3.4 million pieces of suicide and self-injury content, up from 1.3 million in Q3, due to increased reviewer capacity

In 2021, the company plans to share additional metrics for Instagram and add new policy categories for Facebook. Last year, Facebook committed to an independent, third-party audit of its content moderation systems to validate the numbers it publishes; the audit will begin this year.
